Paco Nathan's latest column covers themes that include data privacy, machine ethics, and yes, Don Quixote.

Introduction

Welcome back to our monthly series about data science. I’ve just returned from a lively conference in Spain (¡Guau!) where I noticed how people working in data science on both sides of the Atlantic are grappling with similar issues. Important innovations are emerging from Europe. This month, let’s explore these three themes in detail:

- Data privacy

- Machine ethics

- Don Quixote

Data Privacy

Data privacy has taken a few interesting turns lately, and along with those, compliance, security, and customer experience also reap benefits. Tim Cook, Apple CEO, gave a talk in Brussels in late October about compliance. He warned about the looming “data industrial complex” with our personal data getting “weaponized against us with military efficiency” by the large ad-tech firms, data brokers, and so on. In a subsequent Twitter thread, Cook shared four points about data privacy:

- “Companies should challenge themselves to de-identify customer data or not collect that data in the first place”

- “Users should always know what data is being collected from them and what it’s being collected for”

- “Companies should recognize that data belongs to users and we should make it easy for people to get a copy of their personal data, as well as correct and delete it”

- “Everyone has a right to the security of their data; security is at the heart of all data privacy and privacy rights”

Those points summarize many of the high-level outcomes of the EU General Data Protection Regulation (GDPR) five months after it was introduced. While many firms focus on the risks involved with data privacy and compliance, emerging technologies are making the corollary rewards (i.e. product enhancements) quite tangible.

Irene Gonzálves at Big Data Spain 2018, Reuse in Accordance with Creative Commons Attribution License

I was teaching/presenting at Big Data Spain in Madrid in mid November, the second-largest data science conference in Europe. One talk that stood out was “Data Privacy @Spotify” by Irene Gonzálvez. She gave the first public presentation on how Spotify has implemented the controversial right to be forgotten aspects of GDPR. In brief, Spotify created a new global key-management system called “Padlock” to handle user consent. Any internal app using customer data must first obtain keys from a privacy portal. Conversely, when a customer revokes consent for having their data used, their keys are deleted and their data becomes unreadable. I highly recommend Irene’s video for the full details.

Irene pointed out that data can only be persisted if it is first encrypted. This helps reduce the impact of data leakage. It also enables any single team within Spotify to manage the entire lifecycle of data for a customer. This brings together better data privacy, more proactive compliance, potentially better security, and interesting product enhancements, all in one fell swoop. My takeaway is that we’ll see more big data innovations specifically for privacy, following the lead from firms such as Spotify. These will lead to customer experience enhancement rather than degradation.

Meanwhile, other technologies supporting data privacy and encryption are evolving rapidly. For an excellent overview of contemporary work, see the recent podcast interview “Machine learning on encrypted data” with Alon Kaufman, co-founder and CEO of Duality. Alon describes three complementary approaches for data privacy:

Imagine a system where a machine learning model gets trained on a set of data and then used (inference) on live customer data in production…with the twist that training data gets kept private from the ML model, the model is kept private from the production deployment, and the customer data used for inference stays private too. These three technologies each have trade-offs, some favor one side’s privacy or another’s, yet they can be combined for a robust system.

Duality is a power-house tech start-up, and definitely one to watch closely. Notably, another co-founder is Shafi Goldwasser, winner of the 2012 ACM Turing Award, the Gödel Prize in 1993 and 2001, the 1996 Grace Murray Hopper Award…and the list goes on. If anyone is going to rewrite the fundamentals of computer science, watch Prof. Goldwasser.

For more on how these technologies can work in production use cases, listen to the podcast interview “How privacy-preserving techniques can lead to more robust machine learning models” with Chang Liu of Georgian Partners. Most of these approaches involve injecting noise into the data which has the benefit of potentially producing more general ML models, moving them away from overfitting. However, keep in mind the No Free Lunch theorem ─ added noise may also reduce accuracy.

Dig deeper into the math by checking out these relatively recent papers for details:

- "Differential Privacy and Machine Learning: a Survey and Review”

- “Communication-Efficient Learning of Deep Networks from Decentralized Data”

- “A generic framework for privacy preserving deep learning”

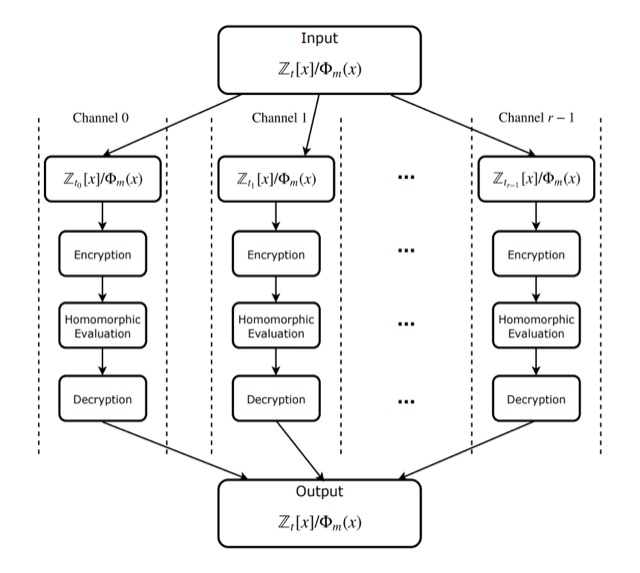

The last paper is related to the rapidly growing PySyft community. So far these kinds of technologies have been computationally expensive to run. However, a recent report points toward potential breakthroughs in performance: “The AlexNet Moment for Homomorphic Encryption: HCNN, the First Homomorphic CNN on Encrypted Data with GPUs”, by Ahmad Al Badawi, Jin Chao, Jie Lin, et al. While previous work in homomorphic encryption used libraries such as SEAL and PySEAL, the Singapore-based research at A*STAR identified bugs in prior research and they also implemented their work on GPUs for improved performance. They report a high security level (> 128 bit) along with high accuracy (99%) which seems eyebrow-raising given the aforementioned matter of injected noise vs. accuracy.

An “arithmetic circuit” used in homomorphic encryption

Admittedly there’s been a scramble recently to seize the now-regrettable, clichéd trope of “The AlexNet Moment for [XYZ],” although it has substance. Cyber attacks are nothing new. The first documented network attack for a financial exploit occurred in France in 1843. Clearly the security threat side of this equation is not going away. Given the emerging technologies – albeit computationally expensive – performance breakthroughs for privacy-preserving techniques will become key factors as innovative technology vendors like Duality change the game, greatly extending practices that consumer companies, such as Spotify, are already using in production.

Meanwhile, as Alon Kaufman and others mentioned, another key component that’s emerging is federated learning. If you’d like to experiment with machine learning on encrypted data, tf-encrypted is a Python library built on top of TensorFlow. Keeping data private when two, three, or more parties are involved is crucial. The conjugate of that multi-party problem is that we also need better ways to distribute machine learning work, especially given how the world is racing toward use cases for edge, IoT, embedded, etc. Another one of my favorite talks from Big Data Spain 2018 was “Beyond accuracy and time” by Amparo Alonso, who leads AI research at Universidade da Coruña. Their team is working on parameter sharing, another compelling alternative. Looking ahead, I’m eager to hear the upcoming talk “The future of machine learning is decentralized” by Alex Ingerman from Google on Thursday, March 28, 2019 at Strata SF.

Machine Ethics

A Chicken Little moment struck earlier this year: the looming GDPR requirements, a seemingly unending stream of reports of major data privacy breaches online, and the shadows cast by the many ethical disasters of Silicon Valley, etc., all happened at once. These factors made the immediate future of data analytics look bleak. How can an organization be “data-driven” if regulators won’t allow you to collect much user data, and meanwhile you’re getting hacked and sued continually about the data you already have?

However, as the say on Wall Street, “Buy the rumor, sell the news.” Months later, innovations for data privacy bring along security benefits and produce better customer experiences. What about other areas of compliance? For instance, can we embrace difficult problems of ethics in data in a similar way, where innovations not only resolve thorny compliance issues but also lead to better products?

Susan Etlinger at AI London

I was fortunate to get to interview Susan Etlinger at The AI Conf in London in October, where our topic was precisely that. Currently the dialog about ethics and data seems to focus on risks, on fears, on regulatory consequences, and frankly, on a sense of bewilderment amongst those who see their entire ecommerce world changing. Can we turn that around and, instead of being driven by risk, build products and services driven by data which are better because of the ethical considerations? ¡Si si si, claro¡ Check out my video interview with Susan.

One key takeaway is it’s impossible to get completely “fair” decisions – life doesn’t work that way – let alone perfectly fair ML models. Leadership in companies must understand this point and recognize that systems deployed with ML are going to err in particular directions (hopefully not harming the customers, the public, and so on), and, as leaders, own those decisions. Own them, be proud of them, proclaim them, make noise. Be transparent instead of keeping those decisions occluded. This is a path toward better data ethics in practice for organizations.

To that point, Google and DataKind have a $25 million call for proposals for AI used to tackle tough social and environmental problems. Do some good, make some noise about it, and win grants. Not a bad deal.

Of course, perfection doesn’t occur often (except for Perfect Tommy in Buckaroo Bonzai). For a good hard look at the math for why perfect ML fairness is a form of unobtainum, check out the recent podcast interview “Why it’s hard to design fair machine learning models” with Sharad Goel and Sam Corbett-Davies at Stanford. To wit, fairness requires many considerations, many trade-offs, and many variables being optimized. This interview covers several great techniques for ML model fairness such as anti-classification, classification parity, calibration, etc. The net effect is, while these are good approaches, requiring them as blanket policy will tend to harm marginalized groups. Which is essentially the opposite of fairness. Moreover, malicious actors can exploit just about any of these approaches. It’s a serious mistake to attempt to quantify fairness or automate the process of oversight. Models must be analyzed, multiple factors must be considered, judgements must be made. This gets into the unbundling of decision-making (more about that in just a bit). To dig deeper, check out Sharad and Sam’s paper: “The Measure and Mismeasure of Fairness: A Critical Review of Fair Machine Learning,” which includes excellent examples that illustrate their arguments.

Extending these arguments, the journal Nature recently featured the article “Self-driving car dilemmas reveal that moral choices are not universal.” Sit down first before reading these conundrums about “machine ethics,” which are based on values surveyed worldwide on what self-driving cars should do in life-and-death dilemmas. Clusters of Western vs. Eastern vs. Southern values reveal the impact that social and economic factors wield on moral norms. For example, how does income inequality, or the relative strength of government institutions, change the very definitions of “ethical” choices? Some cultures believe that automated cars should choose to spare the law-abiding, some would spare the young, while others want to spare people with higher social status. In translation, if you’re an elderly, working class person with even a minor police record, avoid crosswalks on most continents, or else the robots will come and hunt you down! Clearly, there are no perfect rules for AIs to abide by.

Don Quixote

Like dear Alonso Quixano in Cervantes’ early 17th century novel Don Quixote, I experienced a few adventures and surprises at Big Data Spain in Madrid last month. Following my last talk, the MC insisted that I walk barefoot and blindfolded on broken glass. Then, late one night a good friend and I dropped into Café Gijón, a quaint 19th century coffeehouse favored by famous Spanish poets when Jorge Bedoya and his actress sister followed us inside and promptly seized the baby grand for an impromptu virtuoso performance of Flamenco style music on piano. The place erupted. ¡Magnifico!

Central Madrid at Night

However, the most surprising cultural treat was a visit to Restaurante El Tormo where they feature hand-made dishes described in Don Quixote. Granted, some of those dishes were already centuries old at the time of Cervantes’ writing. The proprietors are an older couple (doing some quick math based on the photos along the wall, I figure they must both be in their late 70s or older) who have lovingly researched historical recipes over decades, recreating long-lost techniques. If you reach this part of the world, do not miss El Tormo. Check out these dishes! BTW, “lunch” takes about 4 hours to complete, so plan accordingly.

Part of the beauty of Don Quixote is that in the intervening four centuries since it was first published, generations have struggled to identity the author’s intent. Is it a story of an old man raised on chivalric notions, bewildered by a world that’s changing dramatically amidst the Renaissance? Is it a cynical satire of orthodoxy and nationalism? Does it attempt to prove how individuals can be right while society is wrong? No se.

Regarding ethics and privacy, Susan Etlinger and I recently participated in a workshop at the World Economic Forum (WEF) where they were preparing the AI agenda for next year’s Davos. We were part of a group of 30 experts in the field grappling with Don Quixote-esque issues. One outcome is the Empowering AI Leadership toolkit targeted at BoD members in enterprise firms, to help them understand the crucial but non-intuitive issues which data science implies.

The most senior decision-makers at many established companies have matured in their careers with notions such as Six-Sigma, Lean Startup, and Agile were the philosophies in vogue for technology management. As a result, they learned to consider automation as something inherently deterministic and they were drilled regarding uncertainty as a problem to be eradicated from business processes.

However, the world has changed abruptly and senior decision-makers may find themselves feeling a little like Alonso Quixano trying to make sense of the dramatic changes around him. Nearly half a millennium after Don Quixote was published we might call our current times “Renaissance 2.0”, or something. Now we have probabilistic systems which share in decision-making. As an organization progresses through the typical stages of maturity in their analytics capabilities — descriptive, diagnostic, predictive, prescriptive — gradually more and more of the decision-making shifts toward automated processes.

Today we have good notions of how to train individual contributors for careers in data science. We’re beginning to articulate clear ideas of how to lead data teams effectively. However, the nuances of executive decision making in the context of data science and AI are still a bit murky. The interactions between a board of directors and an executive team regarding data-related issues are mostly in disarray. Like Quixano, they are “tilting at windmills” here (i.e. fighting imaginary enemies).

So bottleneck issues are moving up to exec and BoD levels. Board members schooled on Six Sigma, Agile, etc., are not adequately prepared for judgements regarding AI use cases. They must now consider stochastic systems (read: not deterministic) where uncertainty gets leveraged and the nature of risks has changed. Frankly if they don’t act, the competition (or federal regulators, or opposing legal teams, or consumer advocates, or cyber attackers) will.

WEF is attempting to assist BoD members with this issue head-on by previewing their AI Toolkit at Davos 2019… Afterwards, WEF and friends will work to get this resource evangelized through board level networks worldwide.

Art in Hallway at World Economic Forum, SF

Oddly enough, while we’ve thought of machine learning as a way to generalize patterns from past data, it turns out to that machine learning has a direct relationship to the heart of business theory. Looking back at one of the canonical business texts of the past century, Frank Knight’s Risk, Uncertainty, and Profit (published in 1921) established crucial distinctions between identifiable risks (where one buys insurance) and uncertainty (where profit opportunities exist). Machine learning turns out to be quite good at identifying regions of uncertainty within a dataset as that data gets used to train ML models. That opens the door for variants of semi-supervised learning: models handle predictive analytics where they have acceptable confidence in their predictions, otherwise they refer the provably uncertain corner cases over to human experts on the team. Every business problem should now be viewed as something to be solved by teams of people + machines. In some cases there will be mostly people, while in others there will be mostly machines. In any case, always start from a baseline of managing teams of both. Moreover, leverage the feedback loops between them: where your people and machine learning models learn from each other.

There was an excellent article from McKinsey & Company earlier this year, “The Economics of Artificial Intelligence” by Ajay Agrawal, which points to a need to unbundle the processes of decision-making within organizations. I cannot find any single more important point to stress for those organizations which are just coming into the data space. This is the antidote for contemporary Quixotes, those choleric knights-errant who may be tilting at windmills due to serious conundrums about data, privacy, ethics, security, compliance, competition, and so on. Executives and boards members who grew up on Six Sigma must now re-learn how to think alongside automated, probabilistic systems. Decisions may come from a gut level (reactiveness) or from more expensive investments of personal energy (cognition). What we have learned in 2018 is that to thrive in a world where the competition is leveraging AI, we must move more of the reactive, rapid decisions over to mostly machines while moving the more expensive cognition over to people. Some organizations try to start the other way around, which fails.

Another great resource is the bookThinking Fast and Slow, by Nobel laureate Daniel Kahneman, which outlines the ways in which people typically fail at decision-making. We’re biologically wired to make decisions as individuals; for example bad news gets prioritized over good news to help us stay alive in a harsh, competitive world. Perceived losses get accounted much more strongly than likely gains. Kahneman points out that our evolutionary wiring which compels individuals to attempt to survive long enough to reproduce simply does not work for large organizations making decisions. Especially when we’re talking about complex decisions and long-term strategies, where implications will cross many varied cultures and continents. Ergo, we’ve come to leverage data science as a way to inform organizations, working around common forms of cognitive bias that human decision-makers generally elicit in practice. One problem: the very top ranks of large organizations are still grappling with the implications of “Renaissance 2.0” and how to team up with machines.

“Fast Food” at Madrid Airport

The unbundling of decision-making is one of the most important points about data science today. Understand how your organization makes decisions. Where are points in your business process where immediate gut reactions are vital? Where are points where more thoughtful consideration must occur? Where do machines fit into that picture? Because now they must.

Here are some excellent presentations that explore decision “unbundling” issues in much greater detail:

- “From big data to AI: Where are we now, and what is the road forward?” ─ George Anadiotis, ZDnet

- “The missing piece” ─ Cassie Kozyrkov, Google

- “How social science research can inform the design of AI systems” ─ Jacob Ward, Stanford

- “A new vision for the global brain: Deep learning with people instead of machines” ─ Omoju Miller, GitHub

In the latter talk, Omoju takes a typical enterprise org chart, rotates it 90 degrees, then considers how much that diagram looks and (should) function as a deep neural network architecture. That’s an excellent point to muse upon: what can we learn from how organizations of artificial neural networks have decision making distributed across layers, connection pools, embeddings, etc., and what techniques may be borrowed on behalf of enterprise teams? This is the opposite of tilting at windmills.

Upcoming Events

In November, we had a lively panel discussion “Data Science Versus Engineering: Does It Really Have To Be This Way?” at Domino HQ in San Francisco. I’ll defer to the detailed write-up, but my favorite takeaway was Pete Warden, describing part of the process for planning the development of ML models and product expectations at Google – something they call Wizard of Oz-ing. To paraphrase, have a person stand behind a curtain who plays the role of an anticipated ML model, using the training data to answer questions. Then have a small audience interact with a mock product, as if they are interacting with a wizard behind the curtain. If that role-playing wiz encounters troubles, imagine how difficult training the corresponding ML model might be? It’s a great approach for level-setting expectations – again, for teams of people + machines in production.

Speaking of leadership in data science and the “unbundling” of decision-making process, mark your calendars for the Rev conference in NYC, May 15-16, where we’ll focus on how to lead data science teams and be effective within organizations. We’ll emphasize teams learning from each other throughout the program and feature talented leaders in data analytics, including keynotes from two people I really look up to in this field: Michelle Ufford (Netflix) and Tom Kornegay (Nike). Watch for the “Call for Proposals” to open soon in December.

Meanwhile, there aren’t many data science events scheduled for the remainder of 2018. Please see the new Conference Watchlist for a curated list of conferences about data, machine learning, AI, etc. We’ll continue to highlight important upcoming events here.

Happy Holidays to you and yours!

Subscribe to the Domino Newsletter

Receive data science tips and tutorials from leading Data Science leaders, right to your inbox.

By submitting this form you agree to receive communications from Domino related to products and services in accordance with Domino's privacy policy and may opt-out at anytime.