Subject archive for "model-development," page 3

Themes and Conferences per Pacoid, Episode 9

Paco Nathan's latest article features several emerging threads adjacent to model interpretability.

By Paco Nathan | 29 min read

Addressing Irreproducibility in the Wild

This Domino Data Science Field Note provides highlights and excerpted slides from Chloe Mawer’s "The Ingredients of a Reproducible Machine Learning Model" talk at a recent WiMLDS meetup. Mawer is a Principal Data Scientist at Lineage Logistics and an Adjunct Lecturer at Northwestern University. Special thanks to Mawer for permission to excerpt the slides. The full deck is available here.

By Ann Spencer | 7 min read

Manipulating Data with dplyr

Special thanks to Addison-Wesley Professional for permission to excerpt the following "Manipulating data with dplyr" chapter from the book, Programming Skills for Data Science: Start Writing Code to Wrangle, Analyze, and Visualize Data with R. Domino has created a complementary project.

By Domino | 40 min read

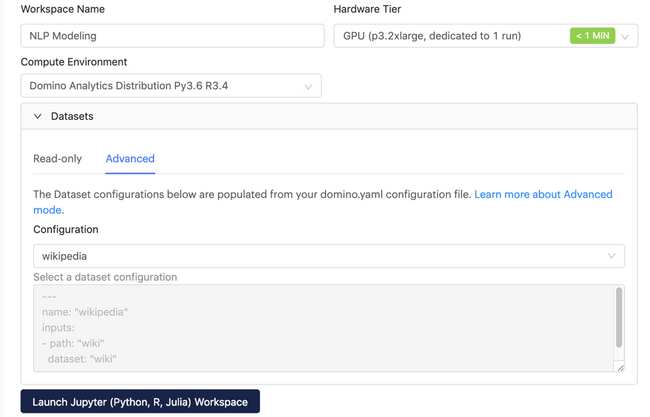

Domino 3.3: Datasets and Experiment Manager

Our mission at Domino is to enable organizations to put models at the heart of their business. Models differ from software in important ways: they require much more data during development, they involve a more experimental research process, and they behave non-deterministically. As a result, organizations need new products and processes to enable data science teams to develop, deploy, and manage models at scale.

By Domino | 5 min read

Themes and Conferences per Pacoid, Episode 7

Paco Nathan covers recent research on data infrastructure as well as adoption of machine learning and AI in the enterprise.

By Paco Nathan | 21 min read

Model Interpretability with TCAV (Testing with Concept Activation Vectors)

This Domino Data Science Field Note provides distilled insights and excerpts from Been Kim’s recent MLConf 2018 talk and research on Testing with Concept Activation Vectors (TCAV), an interpretability method that allows researchers to understand and quantitatively measure the high-level concepts their neural network models use for prediction, “even if the concept was not part of the training”. For additional insights not covered in this blog post, please refer to the MLConf 2018 video, the ICML 2018 video, and the paper.

By Domino | 6 min read
