Last week, Paco Nathan referenced Julia Angwin’s recent Strata keynote that covered algorithmic bias. This Domino Data Science Field Note dives a bit deeper into some of the publicly available research regarding algorithmic accountability and forgiveness, specifically around a proprietary black box model used to predict the risk of recidivism, or whether someone will “relapse into criminal behavior”.

Introduction

Models are at the heart of data science and successful model-driven businesses. As the builders and stewards of models, data scientists are often at the forefront of public debate on appropriate measures, accuracy, and potential bias because these aspects directly impact how models are developed, deployed and evaluated. This public debate and iteration upon each others' work continues to push all of us within data science to be analytically rigorous and continually improve. This post provides additional context regarding the existing debate and research around algorithmic accountability, forgiveness, and bias that Paco Nathan covered last week.

Identifying Algorithmic Forgiveness

Over the past couple of years, Julia Angwin has amplified ProPublica findings regarding bias within, COMPAS (Correctional Offender Management and Profiling Alternative Sanctions), a proprietary commercial algorithm that predicts the risk of recidivism. While Paco Nathan referenced Angwin's algorithmic bias-oriented Strata keynote in September, the entire keynote is not publicly available at the time of this post's publication.

However, similar findings were presented within MIT Media Lab’s publicly available March 2018 “Quantifying Forgiveness: MLTalks with Julia Angwin and Joi Ito” as well as other research cited in this post. Within the research and talks, Angwin argued for revealing machine learning (ML) bias within the black box model by asking the questions “who is likely to be classified as a lower risk of recidivism?”, “who is more likely to be forgiven?”, as well as reviewing the ML outcomes. A couple of Angwin and ProPublica key findings in 2016 include

- “Black defendants were often predicted to be at a higher risk of recidivism than they actually were. Our analysis found that black defendants who did not recidivate over a two-year period were nearly twice as likely to be misclassified as higher risk compared to their white counterparts (45 percent vs. 23 percent).”

- “White defendants were often predicted to be less risky than they were. Our analysis found that white defendants who re-offended within the next two years were mistakenly labeled low risk almost twice as often as black re-offenders (48 percent vs. 28 percent).”

These findings illustrate that white defendants were more likely to be the recipients of algorithmic forgiveness. Yet, the findings also sparked conversations about which measures should be considered when analyzing the data. Data scientists that require more in-depth insights regarding identifying algorithmic forgiveness than provided thus far may want to consider watching the video clip (adapted and reused according to the creative commons attribution license) of the MIT Media Lab MLTalk, reading “How we analyzed the COMPAS recidivism algorithm” as well as downloading the data. Angwin is listed as a co-author on the research.

Algorithmic Accountability: Which Measures to Consider?

In 2016, Angwin et al at ProPublica argued for focusing on the error rates of false positives and false negatives. Northpointe, the owner of COMPAS, counter-argued for focusing on whether the program accurately predicted recidivism. Then, a group of academics argued in the Washington Post that the measure under consideration was really “fairness” and as

“Northpointe has refused to disclose the details of its proprietary algorithm, making it impossible to fully assess the extent to which it may be unfair, however inadvertently. That’s understandable: Northpointe needs to protect its bottom line. But it raises questions about relying on for-profit companies to develop risk assessment tools.”

However, discussions, debate, and analysis did not end in 2016. In 2018, Ed Yong covered Julia Dressel and Hany Farid from Dartmouth College on their study on how “COMPAS is no better at predicting an individual’s risk of recidivism than random volunteers recruited from the internet.” Yong indicated that Dressel identified yet another measure to consider:

“she realized that it masked a different problem. ‘There was this underlying assumption in the conversation that the algorithm’s predictions were inherently better than human ones,’ she says, ‘but I couldn’t find any research proving that.’ So she and Farid did their own.”

In the study and paper, Dressel and Farid indicated

“While the debate over algorithmic fairness continues, we consider the more fundamental question of whether these algorithms are any better than untrained humans at predicting recidivism in a fair and accurate way. We describe the results of a study that shows that people from a popular online crowdsourcing marketplace—who, it can reasonably be assumed, have little to no expertise in criminal justice—are as accurate and fair as COMPAS at predicting recidivism. ….we also show that although COMPAS may use up to 137 features to make a prediction, the same predictive accuracy can be achieved with only two features, and that more sophisticated classifiers do not improve prediction accuracy or fairness. Collectively, these results cast significant doubt on the entire effort of algorithmic recidivism prediction.”

Machine Learning Model Implications in the Real World

While arguments regarding selecting appropriate measures to evaluate models are important, evaluating real world implications once “models are in the wild” are also important. Data scientists are familiar with models performing differently “in the wild” as well as their models being leveraged for a specific use case that they were not originally developed for. For example, Tim Brennan, one of the creators of COMPAS, did not originally design COMPAS to be used in sentencing. Yet, COMPAS has been cited for use within sentencing.

Sentencing

In 2013, Judge Scott Horne cited COMPAS during consideration of appropriate sentencing and probation regarding Eric Loomis. Loomis appealed on the grounds that using a proprietary algorithm violates due process. The Wisconsin Supreme Court ruled against the appeal and the following excerpt is from the State of Wisconsin v. Eric Loomis

"18 At sentencing, the State argued that the circuit court should use the COMPAS report when determining an appropriate sentence:

In addition, the COMPAS report that was completed in this case does show the high risk and the high needs of the defendant. There's a high risk of violence, high risk of recidivism, high pretrial risk; and so all of these are factors in determining the appropriate sentence.

19 Ultimately, the circuit court referenced the COMPAS risk score along with other sentencing factors in ruling out probation:

You're identified, through the COMPAS assessment, as an individual who is at high risk to the community.

In terms of weighing the various factors, I'm ruling out probation because of the seriousness of the crime and because your history, your history on supervision, and the risk assessment tools that have been utilized, suggest that you're an extremely high risk to re-offend."

Who is more likely to be forgiven?

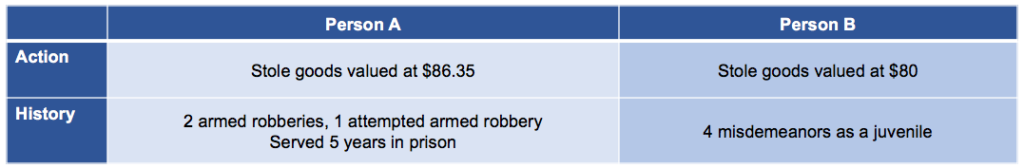

ProPublica highlights a comparison example of two people arrested for petty theft as evidence of bias. While the following is limited detail, which person do you think would have the higher score for recidivism?

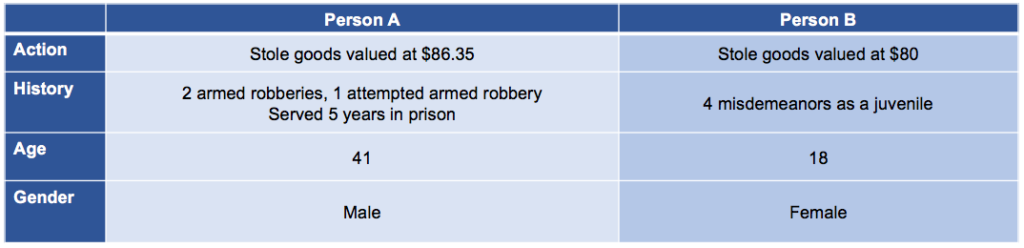

If age and gender attributes were added, would you change your assessment?

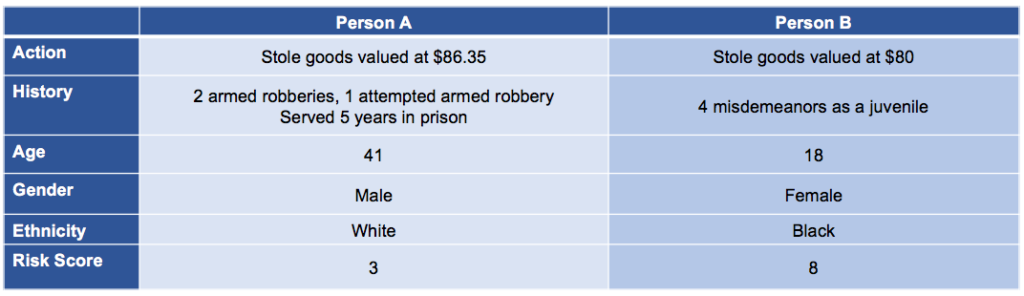

The following table includes an ethnicity attribute and the assigned risk assessment score.

According to the COMPAS predictive risk assessment model, Person B has a higher likelihood of committing recidivism. As part of the ProPublica study, researchers reviewed data two years after classification to assess whether outcomes matched the prediction. Two years after the classification, ProPublica noted that

“we know the computer algorithm got it exactly backward. Borden [Person B] has not been charged with any new crimes. Prater [Person A] is serving an eight-year prison term for subsequently breaking into a warehouse and stealing thousands of dollars’ worth of electronics.”

Person B was a false positive. Person A was a false negative. Or, Person A was more likely to be forgiven by the algorithm than Person B. Additional nuance is provided in the clips of the MIT Media Lab MLTalk and reused according to the creative commons attribution license,

When queried about various measures to consider when analyzing the data set at the MIT Media Lab ML Talk, Angwin indicated that

“it's a semantic argument. We are pointing out that they've chosen a definition of fairness that has this disparate impact in the error rate, and they're saying, "Well, that's not a fair thing because if you change the error rate, you would change this optimization for fairness at predictive accuracy.", but I feel like in the criminal justice context to say that you're totally fine with false positives when the whole point of due process is actually the default to innocence.”

Conclusion

Models are at the heart of the most successful businesses. As data scientists make models, they address many technical and non-technical challenges that touch multiple disciplines. Addressing challenges that touch multiple aspects, including people, processes, and technology, requires the ability to go deep into the data weeds as well as pull oneself out of the data weeds to actualize broader implications. Just a few challenges facing modern data scientists when developing, deploying, and evaluating models include identifying algorithmic bias, deciding whether or not to trust their models, considering the tradeoffs between model accuracy versus model interpretability, reproducibility, and real-world implications of bias. This blog post focused on research, insights, and implications to consider when developing predictive risk assessment models. If you are interested in additional resources, the following resources were reviewed for this post:

ProPublica Research

- Data

- Angwin, Julia. “Making Algorithms Accountable”. ProPublica, August 2016.

- Angwin, Julia and Larson, Jeff. “Bias in Criminal Risk Scores Is Mathematically Inevitable Researchers Say”. ProPublica, December 2016.

- Angwin, Julia et al. “Machine Bias”. ProPublica, May 2016.

- Larson, Jeff et al. “How We Analyzed the COMPAS Recidivism Algorithm”. ProPublica, May 2016.

Talks

- Angwin, Julia. “Quantifying Forgiveness”. O’Reilly Media, September 2018. Note: the entire video is behind a paid gate.

- Angwin, Julia. “What Algorithms Taught Me About Forgiveness”. MozFest 2017. Open Transcripts, October 2017.

- Angwin, Julia and Ito, Joi. “Quantifying Forgiveness: ML Talks with Julia Angwin and Joi Ito”. MIT Media Lab, March 2018.

Articles, Papers, and Cases

- Corbett-Davies, Sam et al. “A computer program used for bail and sentencing decisions was labeled biased against blacks. It's actually not that clear”. The Washington Post, October 2016.

- Dieterich, William et al. "COMPAS Risk Scales: Demonstrating Accuracy Equity and Predictive Parity." NorthPointe Inc Research Department, July 2016.

- Flores, Anthony W. et al. “False Positives, False Negatives, and False Analyses: a Rejoiner to ‘Machine Bias: There’s Software Used Across the Country to Predict Future Criminals. And it’s Biased Against Blacks.” 2017.

- Hardt, Moritz et al. “Equality of Opportunity in Supervised Learning”. 2016.

- Spielkam, Matthias. "Inspecting Algorithms for Bias”. MIT Technology Review, June 2017.

- State of Wisconsin v. Eric Loomis. U.S. Supreme Court of Wisconsin. 2016. Case No.: 2015AP157-CR.

- Yong, Ed. “A Popular Algorithm Is No Better at Predicting Crimes Than Random People”. The Atlantic, January 2018.

Domino Data Science Field Notes provide highlights of data science research, trends, techniques, and more, that support data scientists and data science leaders accelerate their work or careers. If you are interested in your data science work being covered in this blog series, please send us an email at writeforus@dominodatalab.com.

Domino Data Lab empowers the largest AI-driven enterprises to build and operate AI at scale. Domino’s Enterprise AI Platform unifies the flexibility AI teams want with the visibility and control the enterprise requires. Domino enables a repeatable and agile ML lifecycle for faster, responsible AI impact with lower costs. With Domino, global enterprises can develop better medicines, grow more productive crops, develop more competitive products, and more. Founded in 2013, Domino is backed by Sequoia Capital, Coatue Management, NVIDIA, Snowflake, and other leading investors.

Subscribe to the Domino Newsletter

Receive data science tips and tutorials from leading Data Science leaders, right to your inbox.

By submitting this form you agree to receive communications from Domino related to products and services in accordance with Domino's privacy policy and may opt-out at anytime.